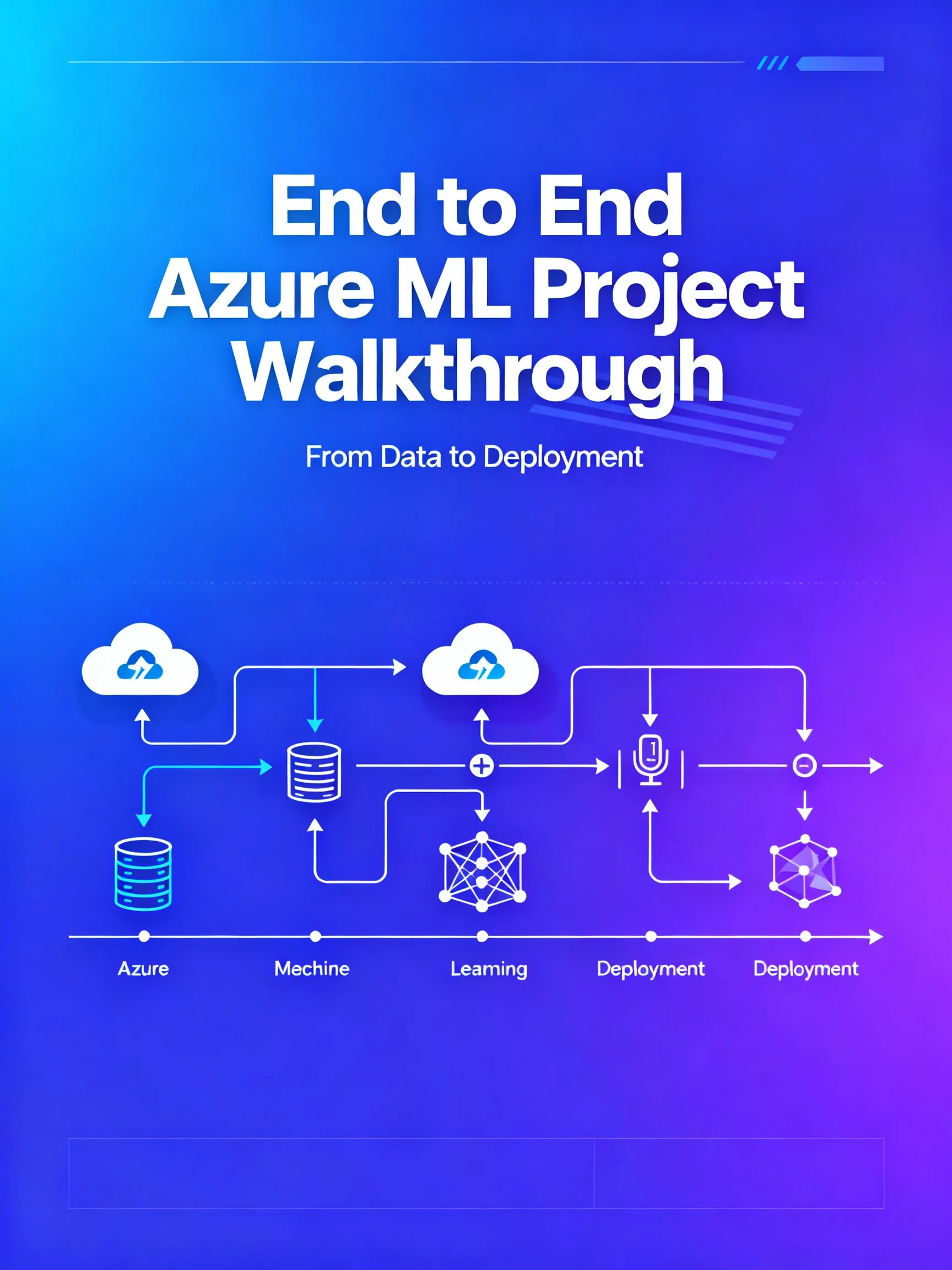

Introduction

An end-to-end Azure ML project workflow takes developers from raw data to production using Azure Machine Learning Studio and Azure DevOps. This walkthrough shows how to prepare data, train and register models in Azure ML Studio, and automate deployments with Azure DevOps pipelines so you can ship reliable models faster.

Project setup and data preparation

Start by creating an Azure ML workspace in the portal or with the Azure CLI (the ml extension, CLI v2). Allocate a compute instance for interactive work and a compute cluster for scalable training. Store data in an Azure Storage account or Azure Data Lake Storage, and register datasets in the workspace so experiments are reproducible. Practical steps:

- Create the workspace with the CLI: az ml workspace create --name myworkspace --resource-group myrg

- Create a compute cluster that scales to zero when idle: az ml compute create --name cpu-cluster --type amlcompute --min-instances 0 --max-instances 4 --resource-group myrg --workspace-name myworkspace

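- Register data: upload the CSVs to a datastore, then in a notebook (SDK v1) build a dataset with dataset = Dataset.Tabular.from_delimited_files(path=[(datastore, 'data/*.csv')]) and register it with dataset = dataset.register(workspace=ws, name='churn-data')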

Tip: include a data schema and a validation step that runs during CI to catch schema drift early. Teams adopting structured MLOps often report 50 to 70 percent faster iteration cycles because of reproducible data and environments.
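A minimal sketch of the CI schema check, assuming the data arrives as CSV and using only the Python standard library. The column names and types in EXPECTED_SCHEMA are hypothetical placeholders for a churn dataset; substitute your own.

```python
import csv
import io

# Hypothetical expected schema: column name -> callable that parses the raw string.
EXPECTED_SCHEMA = {
    "customer_id": str,
    "tenure_months": int,
    "monthly_charges": float,
    "churned": int,
}


def validate_csv(text, schema=EXPECTED_SCHEMA):
    """Return a list of schema problems found in CSV text (an empty list means valid)."""
    errors = []
    reader = csv.DictReader(io.StringIO(text))
    header = reader.fieldnames or []
    missing = [col for col in schema if col not in header]
    extra = [col for col in header if col not in schema]
    if missing:
        errors.append(f"missing columns: {missing}")
    if extra:
        errors.append(f"unexpected columns: {extra}")
    if missing:
        # Row-level checks are meaningless without the expected columns.
        return errors
    # start=2 because line 1 of the file is the header row.
    for line_no, row in enumerate(reader, start=2):
        for col, parse in schema.items():
            try:
                parse(row[col])  # a short row yields None, which int()/float() reject
            except (TypeError, ValueError):
                errors.append(f"line {line_no}: {col}={row[col]!r} is not {parse.__name__}")
    return errors
```

In a CI step, read the file, call validate_csv, and exit with a non-zero status (for example sys.exit(1)) when the returned list is not empty, so the pipeline stops before any training job is submitted.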

Model training and packaging in Azure ML Studio

Use Azure ML Studio to run experiments visually or orchestrate scripts with the SDK. Create an experiment, choose your compute target, and define an environment that pins Conda dependencies and a Docker base image so runs are reproducible. The same environment definition, kept in a YAML file, can be used for training in Azure ML and reused to build the image for scoring.
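Pinning only helps if it is enforced. One way to do that, sketched here as an assumption rather than an Azure ML feature, is a small lint step in CI that flags any dependency in the environment's conda file that is not pinned to an explicit version. The function expects the conda and pip entries already flattened into one list of strings.

```python
import re

# A spec counts as pinned if it is "name=version", "name==version",
# or conda's "name=version=build". Note that conda's single "=" is a prefix
# match (python=3.10 accepts any 3.10.x), so pin the full version if you
# need byte-identical builds.
_PINNED = re.compile(r"^[A-Za-z0-9_.\-]+={1,2}[0-9][A-Za-z0-9_.+\-]*(=[A-Za-z0-9_.]+)?$")


def unpinned(deps):
    """Return the dependency specs that are not pinned to an explicit version."""
    return [d for d in deps if not _PINNED.match(d.strip())]
```

Range specifiers such as >=, ~=, or wildcards like numpy=1.* are reported as unpinned, which is the point: they let a rebuilt environment silently pick up new versions.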

- Define a training job (SDK v1): src = ScriptRunConfig(source_directory='.', script='train.py', compute_target='cpu-cluster', environment=Environment.get(ws, name='env')), then run = experiment.submit(src)

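- Log metrics and artifacts: inside train.py, call run = Run.get_context() and run.log('auc', auc); files written to ./outputs are uploaded to the run automatically, and run.get_metrics() retrieves the logged values afterwards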

- Register the model: model = run.register_model(model_name='cust-churn-model', model_path='outputs/model.pkl')

Include model validation: create a test suite that runs automatically to verify accuracy, AUC, latency, and resource footprint. Save the results as tags or properties on the model in the Azure ML model registry so downstream systems can select appropriate versions.
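The validation suite can start small. This sketch computes accuracy, AUC (as the probability that a randomly chosen positive outscores a randomly chosen negative), and a nearest-rank p95 latency for single-row predictions, using only the standard library. The predict callable and sample inputs are hypothetical stand-ins for your loaded model and test data; resource footprint is not measured here.

```python
import math
import time


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def roc_auc(y_true, scores):
    """AUC via pairwise comparison: positive beats negative = 1, tie = 0.5."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative label")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def p95_latency_ms(predict, inputs):
    """95th-percentile (nearest-rank) wall-clock latency of predict(x), in milliseconds."""
    if not inputs:
        raise ValueError("need at least one input to time")
    timings = []
    for x in inputs:
        start = time.perf_counter()
        predict(x)
        timings.append((time.perf_counter() - start) * 1000)
    timings.sort()
    return timings[max(0, math.ceil(0.95 * len(timings)) - 1)]


def validation_report(y_true, scores, predict, sample_inputs, threshold=0.5):
    """Collect the metrics a CI gate can check; threshold turns scores into labels."""
    y_pred = [1 if s >= threshold else 0 for s in scores]
    return {
        "accuracy": accuracy(y_true, y_pred),
        "auc": roc_auc(y_true, scores),
        "p95_latency_ms": p95_latency_ms(predict, sample_inputs),
    }
```

The returned dictionary can be logged to the run and passed as tags when registering the model.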

Continuous integration with Azure DevOps

Automate training and validation with CI pipelines in Azure DevOps. Configure a service connection to Azure and store secrets in a variable group or Key Vault. A typical CI pipeline runs unit tests, lints code, and triggers an Azure ML training job when new code or data is pushed.

- Common pipeline tasks: run Python unit tests, invoke Azure CLI to submit jobs, and capture run IDs for later stages

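- Example task: use the AzureCLI@2 task to run az ml job create --file train-job.yml --resource-group myrg --workspace-name myworkspace (install the CLI extension first with az extension add --name ml)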

- Store artifacts: publish model metadata and run artifacts as pipeline artifacts, or keep them with the run in Azure ML
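Capturing the run ID usually means reading the job name from the CLI output and exposing it to later stages. This sketch assumes the JSON printed by az ml job create has a top-level name field (the job's identifier in CLI v2) and builds an Azure Pipelines task.setvariable logging command; the variable name trainingJobName is hypothetical.

```python
import json


def job_name_from_cli_output(cli_json):
    """Extract the job name from the JSON that `az ml job create` prints (assumed top-level 'name')."""
    return json.loads(cli_json)["name"]


def set_pipeline_variable(name, value, is_output=True):
    """Build an Azure Pipelines logging command that sets a variable for later steps/stages."""
    flag = ";isoutput=true" if is_output else ""
    return f"##vso[task.setvariable variable={name}{flag}]{value}"
```

A script step can print set_pipeline_variable("trainingJobName", ...) so later jobs can read it as an output variable. Alternatively, adding --query name -o tsv to az ml job create returns just the name.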

CI best practice: enforce model checks (performance gates) so only runs that meet thresholds progress to CD. This prevents accidental promotion of poor models.
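A performance gate can be a short script that fails the pipeline when a candidate model misses fixed thresholds or regresses against the model currently in production. The threshold values below are hypothetical, and the metric dictionaries are assumed to contain auc, accuracy, and p95_latency_ms keys.

```python
# Hypothetical gate settings; tune them to your model and service-level targets.
MIN_METRICS = {"auc": 0.80, "accuracy": 0.75}  # absolute minimums
MAX_LATENCY_MS = 100.0                          # p95 latency ceiling
MAX_REGRESSION = 0.01                           # allowed drop vs. production per metric


def performance_gate(candidate, production=None):
    """Return (passed, reasons) for a candidate's metrics, optionally vs. production's."""
    reasons = []
    for metric, minimum in MIN_METRICS.items():
        if candidate[metric] < minimum:
            reasons.append(f"{metric} {candidate[metric]:.3f} below minimum {minimum}")
        if production is not None and candidate[metric] < production[metric] - MAX_REGRESSION:
            reasons.append(
                f"{metric} regressed from {production[metric]:.3f} to {candidate[metric]:.3f}"
            )
    if candidate["p95_latency_ms"] > MAX_LATENCY_MS:
        reasons.append(f"p95 latency {candidate['p95_latency_ms']:.0f} ms above {MAX_LATENCY_MS} ms")
    return (not reasons, reasons)
```

In the CI job, print the reasons and call sys.exit(1) when passed is False; the CD stage then only runs for models that cleared the gate.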

Continuous deployment to real time or batch endpoints

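Use CD pipelines to deploy the registered model to an endpoint. For real-time scoring, deploy through Azure ML to a managed online endpoint or an Azure Kubernetes Service cluster; Azure Container Instances is best suited to low-scale dev and test deployments, and only in the older v1 tooling. For batch scoring, use batch endpoints and invoke them on a schedule or from a pipeline.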

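- Deploy with the CLI from an AzureCLI@2 task. With CLI v2, describe the endpoint and deployment in YAML and run az ml online-endpoint create --file endpoint.yml followed by az ml online-deployment create --file deployment.yml; the older v1 CLI equivalent is az ml model deploy --name realtime-svc --model cust-churn-model:1 --ic inferenceconfig.json --dc deploymentconfig.json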

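- Use canary or blue-green strategies: create a new deployment under the same endpoint, route a small percentage of traffic to it (for example, az ml online-endpoint update --name <endpoint-name> --traffic "blue=90 green=10"), and monitor performance before full cutover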

- Secure endpoints: use managed identities, enable token authentication, and restrict network access with virtual networks
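The canary pattern amounts to a loop that shifts traffic in steps and rolls back on the first unhealthy signal. This sketch models it in plain Python: blue is the current deployment and green the new one, the step size is an assumption, and is_healthy is a hypothetical callback that applies a split and checks monitoring. to_traffic_arg formats a split in the "name=percent" form accepted by az ml online-endpoint update --traffic.

```python
def to_traffic_arg(split):
    """Format a {deployment: percent} split as 'blue=90 green=10'."""
    if sum(split.values()) != 100:
        raise ValueError("traffic percentages must sum to 100")
    return " ".join(f"{name}={pct}" for name, pct in split.items())


def progressive_rollout(is_healthy, step=10):
    """Move traffic from 'blue' to 'green' in increments of `step` percent.

    is_healthy(split) is a hypothetical callback: apply the split, wait, and
    return True if error rate and latency look fine. The first failure sends
    all traffic back to blue. Returns every split applied, in order.
    """
    applied = []
    green = 0
    while green < 100:
        green = min(100, green + step)
        split = {"blue": 100 - green, "green": green}
        applied.append(split)
        if not is_healthy(split):
            applied.append({"blue": 100, "green": 0})  # roll back
            break
    return applied
```

In a pipeline, is_healthy might run az ml online-endpoint update --name <endpoint-name> --traffic "<to_traffic_arg(split)>", wait for a soak period, and then query Application Insights before returning.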

Practical example: an Azure DevOps release pipeline can call an Azure CLI script to import the new model version, spin up a test deployment, run smoke tests against a small sample of requests, and then swap that deployment into production if tests pass.
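The smoke-test stage can be a short script. This sketch sends a few sample payloads through a send callable and fails on errors, slow responses, or an unexpected response shape. http_sender assumes key-based auth with a Bearer header and a scoring contract of {"data": [rows]} that returns a list with one prediction per row; that contract depends on your scoring script and is an assumption here.

```python
import json
import time
import urllib.request


def http_sender(scoring_uri, api_key, timeout=10):
    """Return a send(payload) function that POSTs JSON to the endpoint's scoring URI."""
    def send(payload):
        req = urllib.request.Request(
            scoring_uri,
            data=json.dumps(payload).encode("utf-8"),
            headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        )
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return json.loads(resp.read())
    return send


def smoke_test(send, samples, max_latency_ms=500, max_failures=0):
    """Run each sample through send(); return (passed, list of failure messages)."""
    failures = []
    for i, payload in enumerate(samples):
        start = time.perf_counter()
        try:
            result = send(payload)
        except Exception as exc:  # network errors, HTTP 4xx/5xx, invalid JSON
            failures.append(f"sample {i}: {exc}")
            continue
        elapsed = (time.perf_counter() - start) * 1000
        if elapsed > max_latency_ms:
            failures.append(f"sample {i}: {elapsed:.0f} ms exceeds {max_latency_ms} ms")
        elif not isinstance(result, list) or len(result) != len(payload["data"]):
            failures.append(f"sample {i}: unexpected response {result!r}")
    return len(failures) <= max_failures, failures
```

Passing send as a parameter keeps the check testable with a fake scorer and lets the release pipeline swap in http_sender pointed at the test deployment.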

Monitoring, governance and cost optimization

After deployment, implement monitoring and governance. Integrate Application Insights for latency and error tracking, and use Azure ML model monitoring to detect data drift and model performance degradation. Configure alerts that notify the team when thresholds are crossed.

- Track metrics: fairness, accuracy, latency, and feature distribution

- Set up retraining triggers: schedule pipelines or trigger retraining automatically when drift exceeds a threshold

- Cost tips: use autoscaling on AKS, choose lower-cost compute for batch jobs, let compute clusters scale to zero, and shut down idle compute instances
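Azure ML model monitoring computes its own drift metrics, but a custom retraining trigger is easy to reason about with the Population Stability Index (PSI), which compares a feature's binned distribution in production with its training baseline. This sketch uses equal-width bins over the baseline's range; the 0.2 threshold is a hypothetical choice (a common rule of thumb reads PSI above about 0.25 as a significant shift).

```python
import math


def psi(expected, actual, bins=10):
    """Population Stability Index: sum over bins of (a - e) * ln(a / e).

    Bins are equal-width over the baseline's range; values outside that range
    are clamped into the edge bins, and a small epsilon avoids log(0).
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        return [c / len(values) for c in counts]

    eps = 1e-6
    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log((ai + eps) / (ei + eps)) for ei, ai in zip(e, a))


def features_to_retrain_on(baseline, production, threshold=0.2):
    """Hypothetical trigger: names of features whose PSI exceeds the threshold."""
    return sorted(f for f in baseline if psi(baseline[f], production[f]) > threshold)
```

A scheduled job could run this over the latest window of logged inputs and submit the training pipeline whenever the returned list is non-empty.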

Maintain an immutable audit trail by recording model lineage in Azure ML and integrating with a governance system or a data catalog. Compliance often requires model explainability artifacts; generate SHAP values or counterfactuals as part of the CI pipeline and store them with the model.
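A lineage record can be as simple as a JSON document that ties the model artifact's hash to the job, data, and metrics that produced it, plus a hash of the record itself so later edits are detectable. This is a hypothetical format, not an Azure ML schema; the records only form a meaningful audit trail if they are stored somewhere append-only, for example Blob Storage with an immutability policy.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(body):
    """SHA-256 of the record's canonical JSON (sorted keys)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def lineage_record(model_bytes, model_name, model_version, training_job, dataset_ref, metrics):
    """Build an audit record linking a model artifact to its job, data, and metrics."""
    record = {
        "model": f"{model_name}:{model_version}",
        "model_sha256": hashlib.sha256(model_bytes).hexdigest(),
        "training_job": training_job,
        "dataset": dataset_ref,
        "metrics": metrics,
        "recorded_at": datetime.now(timezone.utc).isoformat(),
    }
    record["record_sha256"] = _digest(record)
    return record


def verify(record):
    """True if no field has changed since the record hash was computed."""
    body = {k: v for k, v in record.items() if k != "record_sha256"}
    return _digest(body) == record["record_sha256"]
```

Explainability outputs such as SHAP summaries could be hashed and referenced from the same record so they travel with the model version they describe.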

Conclusion

Implementing an end-to-end Azure ML project requires reproducible environments, registered datasets, model validation, and CI/CD automation with Azure DevOps. Follow the steps above to train reliably in Azure ML Studio, automate builds and deployments, and monitor models in production. The payoff is faster, safer deployments and clearer model lineage for teams operating in regulated environments.
