In this post I’ll share a simple AWS cost optimization technique that anyone can use to cut their AWS bill. If you’ve used AWS, you may know that many services write their logs to CloudWatch, and that log groups keep those logs indefinitely unless you set a retention policy. The storage cost might not seem like much at first, but it adds up as an AWS account grows.

Now imagine working for an enterprise with 50-plus AWS accounts. AWS has 22 regions as of writing this post. If you have resources deployed across 50 accounts and 22 regions, that is around 1,100 account-region combinations, each with its own CloudWatch log groups to modify. Updating them one by one in the AWS console is out of the question 😟

Introducing Boto3

Boto3 is the Python SDK for AWS. It allows you to directly create, update, and delete AWS resources from your Python scripts.

More on boto3 here: https://boto3.amazonaws.com/v1/documentation/api/latest/guide/quickstart.html

Implementation

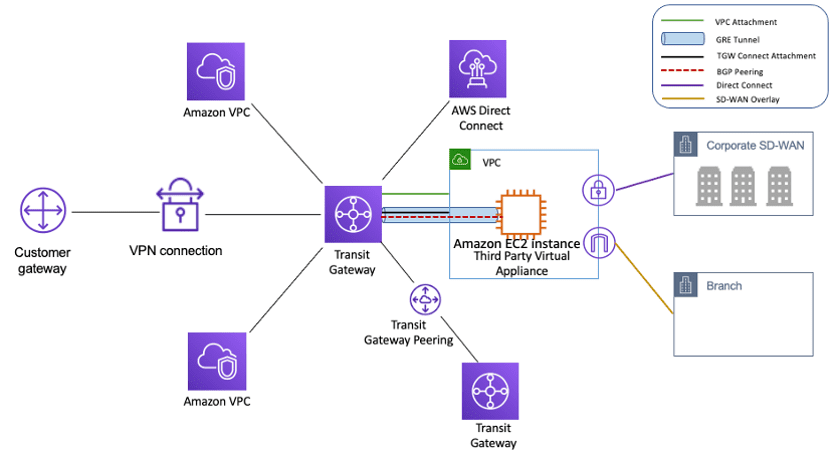

To update the retention of CloudWatch log groups across multiple accounts and regions using Lambda and boto3, you first need to set up cross-account access so the function can assume a role in each target account. You can do this by creating an IAM role in each target account with the necessary permissions, and then adding a trust relationship that lets the account where the Lambda function runs assume that role.
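As a rough sketch of what those two pieces might look like, the snippet below builds a trust policy and a permissions policy as Python dictionaries and prints them as JSON. The account ID `111111111111` is a placeholder and the function names are made up for illustration. This version trusts the whole central account; in practice you would probably narrow the principal to the Lambda function’s execution role.

```python
import json

# Placeholder for the account where the Lambda function runs (an assumption,
# not a value from this article)
CENTRAL_ACCOUNT_ID = "111111111111"


def build_trust_policy(central_account_id):
    """Trust policy letting principals in the central account assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{central_account_id}:root"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def build_permissions_policy():
    """Permissions the role needs to list log groups and set their retention."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["logs:DescribeLogGroups", "logs:PutRetentionPolicy"],
                "Resource": "*",
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_trust_policy(CENTRAL_ACCOUNT_ID), indent=2))
    print(json.dumps(build_permissions_policy(), indent=2))
```

The Lambda function’s own execution role also needs permission to call `sts:AssumeRole` on the role in each target account.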

Once you have set up the necessary permissions, you can use the following code to update the retention of CloudWatch log groups in the target accounts:

- Import the necessary libraries and packages:

import boto3
import os

- Define the function to update the CloudWatch Logs retention:

def update_cloudwatch_logs_retention(logs_client, log_group_name, retention_days):
    # Set the retention period on one log group, using a client that is
    # already bound to the target account and region
    logs_client.put_retention_policy(
        logGroupName=log_group_name,
        retentionInDays=retention_days
    )

- Define the Lambda function to update the CloudWatch Logs retention across multiple accounts and regions:

def lambda_handler(event, context):
    # Get the lists of accounts and regions to update
    accounts = [a.strip() for a in os.environ['ACCOUNTS'].split(',')]
    regions = [r.strip() for r in os.environ['REGIONS'].split(',')]

    # Create the STS client once, with the Lambda function's own credentials
    sts_client = boto3.client('sts')

    for account in accounts:
        # Get temporary credentials for the account (they work in every region)
        temp_credentials = sts_client.assume_role(
            RoleArn=f'arn:aws:iam::{account}:role/CloudwatchLogsUpdater',
            RoleSessionName='CloudwatchLogsUpdaterSession'
        )['Credentials']

        # Build a session for this account instead of overwriting the default
        # session, so the next assume_role call still uses the Lambda's role
        session = boto3.Session(
            aws_access_key_id=temp_credentials['AccessKeyId'],
            aws_secret_access_key=temp_credentials['SecretAccessKey'],
            aws_session_token=temp_credentials['SessionToken']
        )

        for region in regions:
            # Create a CloudWatch Logs client for this account and region
            logs_client = session.client('logs', region_name=region)

            # describe_log_groups returns results in pages, so use a paginator
            paginator = logs_client.get_paginator('describe_log_groups')
            for page in paginator.paginate():
                # Loop through each log group and update its retention
                for log_group in page['logGroups']:
                    update_cloudwatch_logs_retention(
                        logs_client, log_group['logGroupName'], retention_days=365
                    )

- Add the necessary environment variables to the Lambda function:

ACCOUNTS: <comma-separated list of AWS accounts>

REGIONS: <comma-separated list of regions>

This code assumes a role in each target account, creates a CloudWatch Logs client with the assumed-role credentials for each region, and lists all log groups there. It then iterates through the log groups and sets the retention policy to the specified number of days.
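One possible refinement, not part of the code above, is to leave alone any log group that already has a retention policy. The records returned by `describe_log_groups` include a `retentionInDays` key only when a policy is set, so a small helper can pick out the groups that never expire. The helper name and the sample records below are made up for illustration.

```python
def groups_without_retention(log_groups):
    """Return the names of log groups that have no retention policy.

    `log_groups` is a list of dicts shaped like the 'logGroups' entries
    returned by describe_log_groups.
    """
    return [
        group["logGroupName"]
        for group in log_groups
        if "retentionInDays" not in group
    ]


# Hand-written sample records (illustrative, not real API output)
sample = [
    {"logGroupName": "/aws/lambda/orders", "retentionInDays": 30},
    {"logGroupName": "/aws/lambda/billing"},
]

print(groups_without_retention(sample))  # ['/aws/lambda/billing']
```

Filtering this way avoids lengthening the retention of groups that someone deliberately set to a shorter period.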

You can then run this Lambda function on a schedule or in response to a specific event. For example, you could run it every day so that newly created log groups in all target accounts also get the retention policy.
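One way to get a daily schedule is an Amazon EventBridge rule that targets the function. The sketch below is only an outline under some assumptions: the rule name, statement ID, and helper name are hypothetical, and the clients are passed in so the helper can be exercised without touching AWS.

```python
def schedule_daily_run(events_client, lambda_client, function_arn,
                       rule_name="cloudwatch-logs-retention-daily"):
    """Create a daily EventBridge rule that invokes the given Lambda function.

    `events_client` and `lambda_client` are expected to be boto3 'events'
    and 'lambda' clients. The rule name is a made-up default.
    """
    # Create (or update) a rule that fires once a day
    rule_arn = events_client.put_rule(
        Name=rule_name,
        ScheduleExpression="rate(1 day)",
        State="ENABLED",
    )["RuleArn"]

    # Allow EventBridge to invoke the function. Running this twice with the
    # same StatementId raises a conflict error, so handle that if you re-run.
    lambda_client.add_permission(
        FunctionName=function_arn,
        StatementId=f"{rule_name}-invoke",
        Action="lambda:InvokeFunction",
        Principal="events.amazonaws.com",
        SourceArn=rule_arn,
    )

    # Point the rule at the function
    events_client.put_targets(
        Rule=rule_name,
        Targets=[{"Id": "1", "Arn": function_arn}],
    )
    return rule_arn


# Example usage (requires AWS credentials, so it is left commented out):
# import boto3
# schedule_daily_run(boto3.client("events"), boto3.client("lambda"),
#                    "arn:aws:lambda:us-east-1:111111111111:function:my-function")
```

The function ARN in the usage comment is a placeholder. The same schedule can also be configured in the console or with infrastructure-as-code tooling if you prefer not to script it.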

You can adjust the retention period by changing the retention_days value (365 in this example) that lambda_handler passes to the update_cloudwatch_logs_retention function.
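Note that `put_retention_policy` only accepts certain values for the number of days. The sketch below reads the retention period from a hypothetical `RETENTION_DAYS` environment variable (not part of the setup described here) and checks it against the values listed in the CloudWatch Logs API reference at the time of writing. Check the current documentation before relying on the list.

```python
import os

# Retention values accepted by put_retention_policy according to the
# CloudWatch Logs API reference; verify against the current documentation
VALID_RETENTION_DAYS = {
    1, 3, 5, 7, 14, 30, 60, 90, 120, 150, 180, 365, 400, 545,
    731, 1096, 1827, 2192, 2557, 2922, 3288, 3653,
}


def get_retention_days(default=365):
    """Read RETENTION_DAYS (a hypothetical variable) and validate it."""
    days = int(os.environ.get("RETENTION_DAYS", default))
    if days not in VALID_RETENTION_DAYS:
        raise ValueError(
            f"{days} is not an accepted retention period; "
            f"choose one of {sorted(VALID_RETENTION_DAYS)}"
        )
    return days
```

With this in place, the handler could pass `retention_days=get_retention_days()` instead of the hard-coded 365, so the period can be changed without redeploying the code.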
